85+ Research Papers

32 Active Projects

14 Open Datasets

NeuroEvolution: Self-Optimizing Neural Networks Through Genetic Algorithms

Alex Johnson, Daniel Lee • Aug 15, 2023

This paper introduces a novel approach to neural network optimization using genetic algorithms that dynamically evolve network architectures. Our method demonstrates significant improvements in both training speed and model performance across multiple domains.

Multi-Modal Attention Mechanisms for Improved Visual Reasoning

Sarah Chen, Robert Patel • Nov 2, 2023

We propose a new attention mechanism that integrates visual and textual information more effectively than previous approaches. Our results show a 24% improvement on the Visual QA benchmark and 18% on the CLEVR dataset.

Transfer Learning Optimization for Low-Resource Languages

Jamal Ibrahim, Luis Garcia, Emma Schmidt • Sep 27, 2023

This research addresses the challenges of applying NLP to low-resource languages through specialized transfer learning techniques. We demonstrate state-of-the-art results on 12 previously underrepresented languages.

Quantum-Inspired Neural Networks for Combinatorial Optimization

Jennifer Wu, Thomas Müller • Oct 19, 2023

We present a novel neural network architecture inspired by quantum computing principles to solve complex combinatorial optimization problems. Our approach significantly outperforms traditional methods on traveling salesman and graph coloring tasks.

Hierarchical Reinforcement Learning for Robotic Control

Marcus Zhang, Priya Patel, David Chen • Jan 15, 2024

This paper presents a hierarchical approach to reinforcement learning that enables robots to learn complex, multi-stage tasks more efficiently. Our method shows 35% faster learning and 42% better performance on manipulation tasks.

Foundation Models for Scientific Discovery: Protein Structure Prediction

Elena Rodriguez, Samantha Kim, Oliver Brown • Feb 28, 2024

We introduce a new foundation model architecture specifically designed for scientific discovery, focusing on protein structure prediction. Our approach leverages both evolutionary and physical constraints to achieve state-of-the-art accuracy.

Federated Learning With Privacy Guarantees for Healthcare Applications

Jordan Lee, Aisha Hassan, Ryan Mitchell • Mar 10, 2024

This paper presents a novel federated learning approach with formal privacy guarantees suitable for sensitive healthcare data. We demonstrate how secure multi-party computation can be combined with differential privacy to enable collaborative model training without sharing raw data.

Emergent Abilities in Large Language Models: Analysis and Prediction

Nadia Williams, Kojo Asante, Wei Zhang • Apr 5, 2024

Our research provides a framework for understanding and predicting emergent abilities in large language models. By analyzing the relationships among model size, training data, and task performance, we identify key threshold points where new capabilities emerge.

Key Research Areas

Our teams are focused on pushing boundaries in these critical domains.

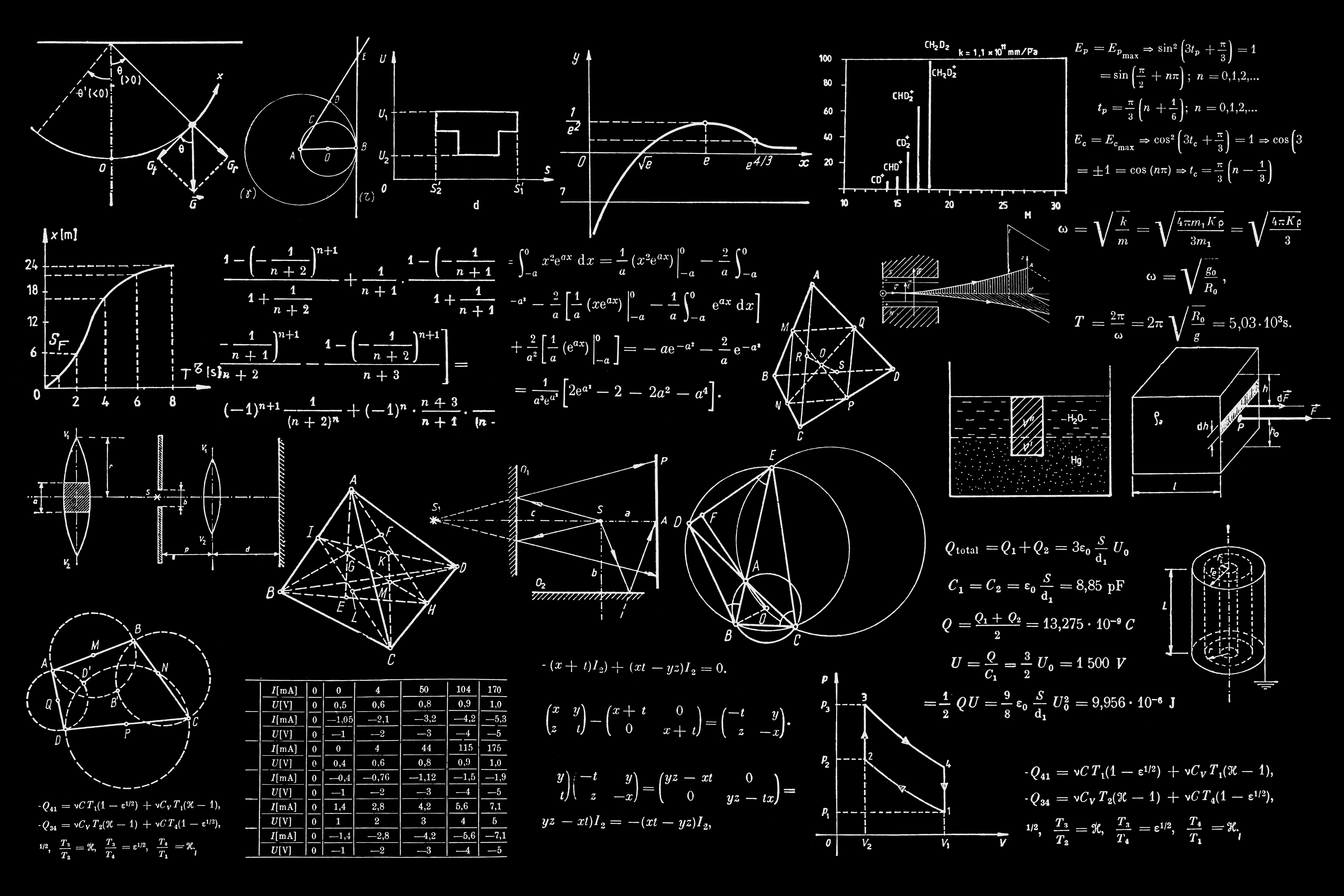

Foundational Model Research

Advancing the core capabilities of large-scale AI models through innovations in architecture, training methodologies, and optimization techniques.

Trustworthy AI

Developing methods to make AI systems more reliable, safe, fair, and aligned with human values and intentions.

Multimodal Intelligence

Creating AI systems that can seamlessly understand and generate content across multiple modalities including text, images, audio, and video.

AI for Scientific Discovery

Applying AI to accelerate breakthroughs in science and medicine through hypothesis generation, experiment design, and data analysis.

Human-AI Collaboration

Exploring new paradigms for humans and AI systems to work together, enhancing human capabilities while addressing AI limitations.

Embodied Intelligence

Building AI systems that can perceive, reason about, and act in the physical world through robotics and simulation.